There’s a story I like to tell, which I vaguely remembered as originating at Bell Labs or Xerox PARC. A researcher had a rubber duck in his office. When he found himself stumped on a problem, he would pick up the duck, walk over to a colleague, and ask them to hold the duck. He would proceed to explain the problem, often realizing the solution himself in the middle of the explanation. Then he would say, “Thank you for holding my duck”, and leave.

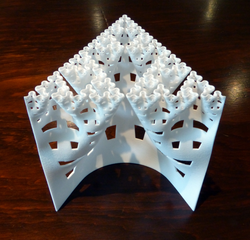

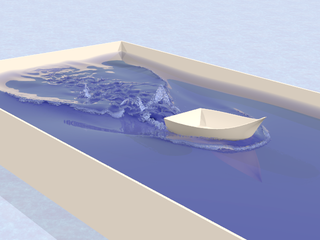

TensorFlow

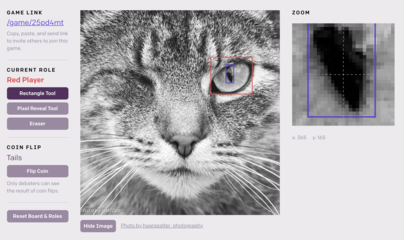

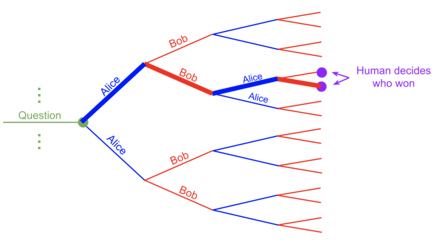

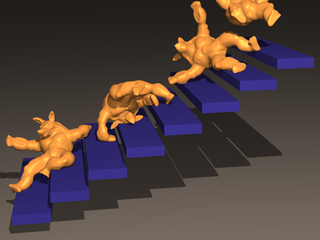

Eddy

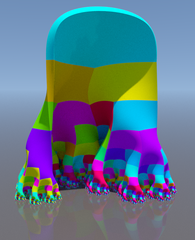

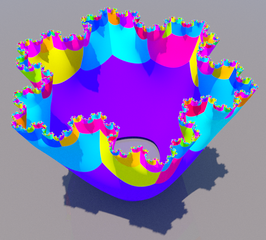

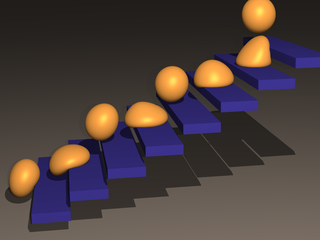

Geode
